It goes without saying that digital technologies have lowered the barriers to writing, printing, and publishing books. And yet, when we think about the future of the book, too often we (historians especially) imagine the book in terms of the large commercial or academic press that follows an age-old process in which authors sit down at a typewriter and peck away at the keyboard, filling page after page with text. What I’ve come to realize, though, is that I arrived at these problems of the future of the book from a quite different starting point, by way of a roundabout journey that began without much consideration of either platform or press.

The questions that I am presently exploring are quite specific. How do we deploy an e-publishing solution for mobile interpretive projects powered by Curatescape (and Omeka)? That problem has transformed my colleagues and partners into publishers, revealing a convergence between public humanities projects and traditional scholarly endeavors. This convergence suggests that as we sprint beyond the book, we should appreciate both the importance of the book’s unique presence and the ways in which the book can be enriched by new approaches to the production of knowledge.

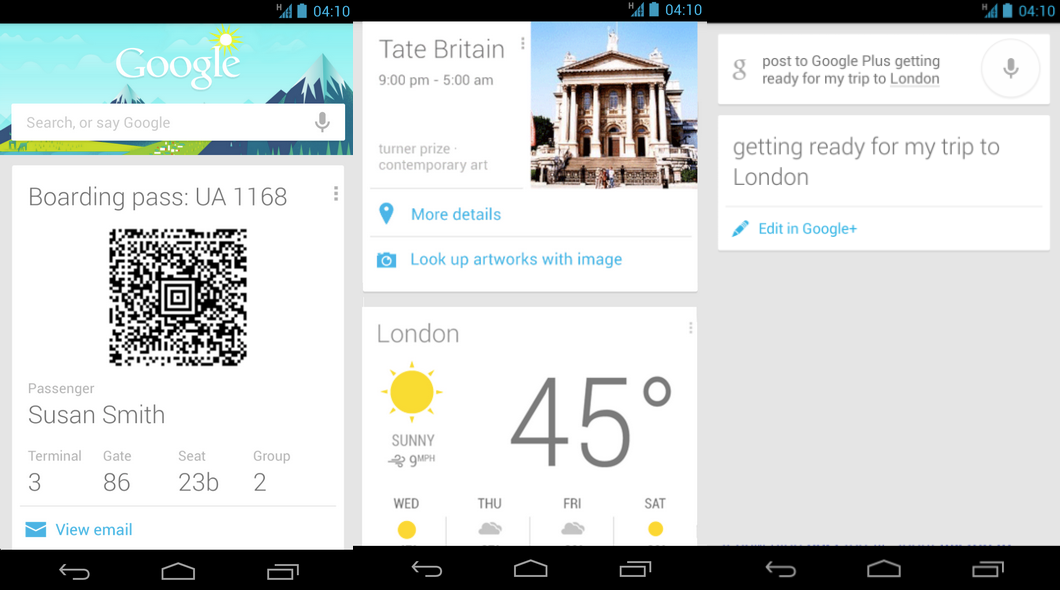

Curatescape, the framework for mobile publishing developed by my research lab, emerged from several professional practices that have converged in the digital age.

Urban and public historians have long been curating landscape, well before the term “curation” was applied as widely as it has been in the digital age. Often emerging out of innovative community-driven teaching, these “local” historians and their students and collaborators studied neighborhoods, communities, and civic spaces. The outcomes of that work (papers, presentations, walking tours, and public history projects) frequently made their way back to the community through interactive projects, featuring dialogues between students and their key informants. That dialogue, framed by historical scholarship and primary source documents, yielded remarkable experiential learning, of the sort that produced civic engagement. This approach has become a standard feature on many university campuses through service learning and experientially based classroom assignments. The digital age has yielded new ways to feature that work, ranging from blogs to digital archival platforms. Suddenly, we’ve moved from one-off projects to those that can (potentially) build upon one another.

The ability to create shared learning environments led innovators to create standards-based platforms and tools for publishing on the Internet. WordPress, Blogger, and Tumblr are the best-known surviving tools from this moment, having become common blogging (or microblogging) software. In the archival world, open-source archival content management systems emerged to help librarians and curators document and share their collections of books, material culture, and photographs. In academic and library settings, tools like CollectiveAccess or Omeka have become commonly used archival systems, emulating blogging platforms in the way they allow heritage professionals to engage publics about their important cultural collections.

At the turn of the twenty-first century, oral history practice underwent a dramatic transformation, driven by the emergence of digital tools for collecting, processing, and archiving oral history. The results accelerated trends already underway in the field, away from reliance on written transcripts to mediate what is a deeply human and aural experience. Digital collection of stories democratized oral history by allowing anyone to record narratives. And it made those sound files more sharable than they’d ever been. Coupled with easier indexing, annotating, and archiving, oral history became malleable and could be included easily in the emerging ecosystem of digital humanities projects. Setting aside the work of filmmakers, these trends allowed scholars and documentarians for the first time to widely share human voices as part of their interpretive work. As part of a broader proliferation of interpretive multimedia, the very nature of storytelling has shifted toward layered multimedia presentation.

In 2005, as these trends emerged and I engaged them with students, teachers, and colleagues, I was asked to produce content for history kiosks that would be located along a rapid bus route in Cleveland, Ohio. Our team built elaborate multimedia stories for these kiosks, which appeared on the streets at the very moment the iPhone emerged. Recognizing that such locative technologies promised to transform cities into living museums, our team adapted the kiosk project to mobile devices. Bringing together a series of convergences (in engaged student learning, open-source content management systems, and digital oral history), our first project, Cleveland Historical, developed as a web-based mobile interpretive project that allowed our team to curate the city through interpretive, layered multimedia stories. Cleveland Historical became the first iteration of Curatescape, a broader framework for mobile curation that uses the Omeka content management system as its core archive. Importantly, we don’t call our work a “platform” but a framework that uses multiple digital tools, content management systems, and standards. We exist within a broader system of knowledge production that is both technical and conceptual.

In building our Cleveland project, as well as working with more than 30 partners to launch their projects, we’ve realized that our teams of students, communities, and scholars are curating landscape through interpretive stories. They’re also publishing rich collections of multimedia stories that engage the landscape in remarkable ways. These projects transform how we experience place, and also provide an avenue for shaping conversations about place.

Critically, our audiences and interpreters also have challenged the boundaries of our community, urging us to produce information feeds in a variety of formats, including e-books and even printed books. They want to read our interpretive historical stories as collections, with different sorts of connections to other interpretive projects (both inside and outside the Curatescape system).

Quite suddenly, we’ve found ourselves asking what these travelogues should look like. We’re asking about the role of multimedia, the formats, and the outputs (e-books, print, the formatting of the RSS feed). We’re just as interested in the use cases: is this for local urban walking tours, for thematic books that feature the apps’ tours, or for aggregations of stories across space, about parks or Civil Rights, for example? The questions of what this might look like, and of what it means to write a book, have challenged our sense of the book itself. What is it that we’re publishing? If it is not a book, what is it? Critically, the convergence of tools, approaches, and materials suggests to me that whatever forms emerge should reflect emerging approaches to systems of knowledge production. Hearkening back to a mythic book as a standard and goal may be the wrong approach to take as we sprint toward the future of the book.